Standalone Cluster

Standalone is the simplest way to run Spark in true cluster mode. It uses Spark's native master, workers, and executors.

Submitting Spark jobs to a Standalone cluster

spark-submit --class
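As a rough illustration of what a complete submission command can look like, the sketch below assembles a `spark-submit` argument list in Python. The master host, the main class `com.example.MyApp`, the jar path, and the application argument are hypothetical placeholders. 7077 is the default port of a standalone master, and `--master`, `--deploy-mode`, and `--class` are standard `spark-submit` options.

```python
import shlex


def build_submit_command(master_host, main_class, app_jar, app_args=(),
                         master_port=7077, deploy_mode="client"):
    """Assemble a spark-submit argument list for a standalone master."""
    command = [
        "spark-submit",
        "--master", f"spark://{master_host}:{master_port}",
        "--deploy-mode", deploy_mode,
        "--class", main_class,
        app_jar,
    ]
    # Anything after the jar is passed to the application's main method.
    command.extend(app_args)
    return command


# Hypothetical values, for illustration only.
cmd = build_submit_command("master-host", "com.example.MyApp",
                           "/path/to/my-app.jar", ["100"])
print(shlex.join(cmd))
```

The list form can be handed to `subprocess.run(cmd, check=True)` on a machine where `spark-submit` is on the `PATH`.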

Spark Master

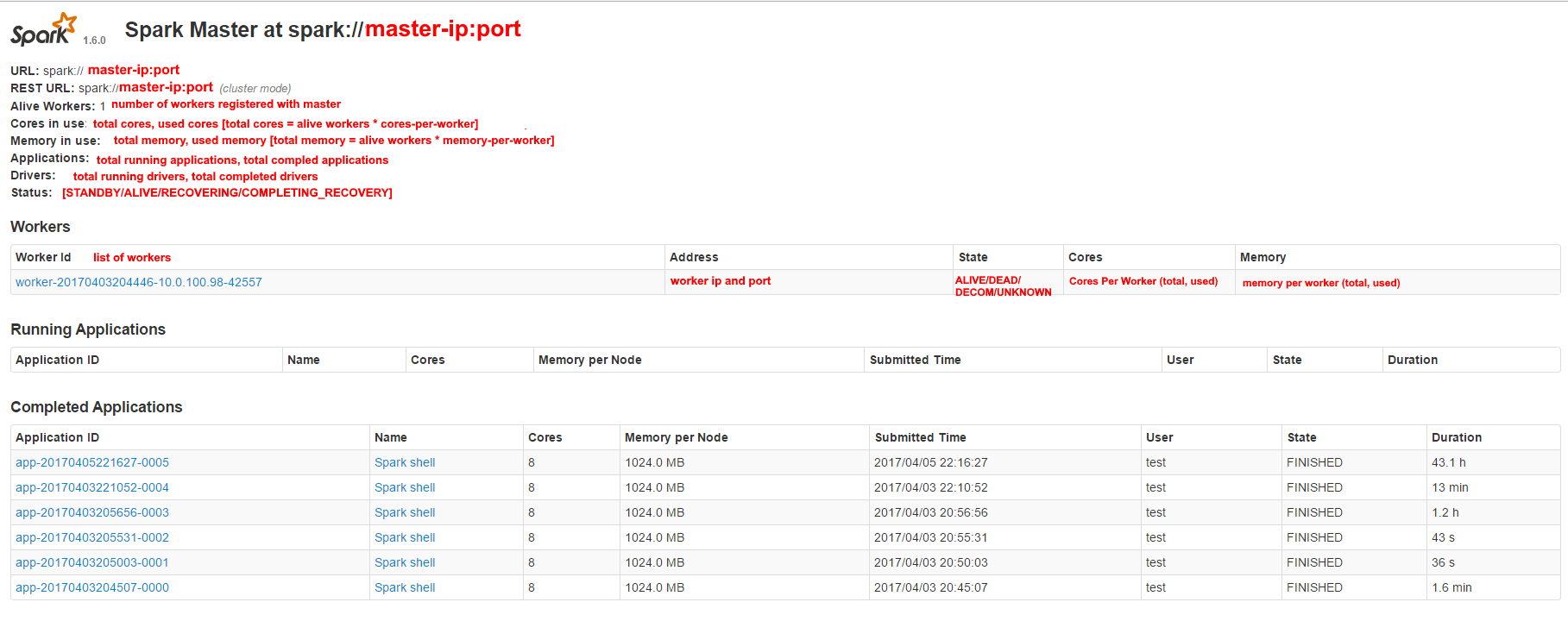

The Spark master is the resource manager in a Standalone cluster. It has the following responsibilities:

- Keeping track of workers, applications (completed, running, waiting), and drivers.

- Scheduling executors by talking to workers.

Spark master states

- STANDBY: initial state of a new master

- ALIVE: active master

- RECOVERING: master initializing the HA (High Availability) recovery process

- COMPLETING_RECOVERY: master completing the HA recovery process
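The four states can be modelled as a small enum. The sketch below is only an illustration: `find_active_master` and the snapshot dictionary are hypothetical, and how the state of each master is obtained is left out.

```python
from enum import Enum


class MasterState(Enum):
    STANDBY = "STANDBY"
    ALIVE = "ALIVE"
    RECOVERING = "RECOVERING"
    COMPLETING_RECOVERY = "COMPLETING_RECOVERY"


def find_active_master(reported_states):
    """Return the address of the first master reporting ALIVE, or None."""
    for address, state_name in reported_states.items():
        if MasterState(state_name) is MasterState.ALIVE:
            return address
    return None


# Hypothetical snapshot of two masters in an HA setup.
snapshot = {"master-1:7077": "STANDBY", "master-2:7077": "ALIVE"}
active = find_active_master(snapshot)
```

A master that is still in RECOVERING or COMPLETING_RECOVERY is deliberately not treated as active here, so the helper returns `None` while a failover is in progress.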

Spark Master REST API

The Spark master has an undocumented REST API that provides the following endpoints:

- [POST] /v1/submissions/create : submit a new Spark application to the standalone cluster

- [GET] /v1/submissions/status/driver-<appid> : get status of a submitted application

- [POST] /v1/submissions/kill/driver-<appid> : kill a submitted application.

For more details on payload and response formats, refer to the article "Apache Spark’s Hidden REST API".
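The endpoints above can be addressed with a few lines of Python. This is a minimal sketch under some assumptions: the REST server is taken to listen on port 6066 (its usual default), it may be disabled by default depending on the Spark version, and the helper names are hypothetical. The payload for `create` is not shown because its format is not covered here.

```python
import json
import urllib.request

REST_PORT = 6066  # assumption: default port of the master's REST server


def submission_url(master_host, action, submission_id=None, port=REST_PORT):
    """Build the URL for one of the three endpoints listed above."""
    if action not in ("create", "status", "kill"):
        raise ValueError(f"unknown action: {action}")
    url = f"http://{master_host}:{port}/v1/submissions/{action}"
    if action != "create":
        # status and kill both address one specific submission.
        if not submission_id:
            raise ValueError(f"{action} needs a submission id")
        url = f"{url}/{submission_id}"
    return url


def get_status(master_host, submission_id):
    """GET the status of a submission and decode the JSON response."""
    url = submission_url(master_host, "status", submission_id)
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def kill(master_host, submission_id):
    """POST a kill request for a submission and decode the JSON response."""
    url = submission_url(master_host, "kill", submission_id)
    request = urllib.request.Request(url, data=b"", method="POST")
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

For example, `get_status("master-host", "driver-20240101000000-0000")` would query a hypothetical submission on a hypothetical host; both values are placeholders.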

Spark Master UI unique features

The Spark UI is covered in depth later; this section covers the UI features that are not found in other deployment modes.

Spark Master UI

Available at http://<masterip>:8080. 8080 is the default port, but it can be changed with the following setting in

conf/spark-env.sh

SPARK_MASTER_WEBUI_PORT=8080
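A script that needs the UI address can apply the same default-and-override rule. The helper below is a hypothetical sketch: it assumes `SPARK_MASTER_WEBUI_PORT` has been exported into the environment of the Python process, which is not automatically the case just because it is set in `conf/spark-env.sh`.

```python
import os


def master_ui_url(master_host, env=None):
    """Return the master UI URL, falling back to the default port 8080."""
    env = os.environ if env is None else env
    port = int(env.get("SPARK_MASTER_WEBUI_PORT", "8080"))
    return f"http://{master_host}:{port}"


# Explicit environments are passed here to keep the example deterministic.
default_url = master_ui_url("master-host", env={})
custom_url = master_ui_url("master-host", env={"SPARK_MASTER_WEBUI_PORT": "9090"})
```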

This UI server is not available in any other Spark deployment mode.

The main parts of the Spark master UI are called out below.

Spark Worker

Spark Worker States

- ALIVE

- DEAD

- DECOMMISSIONED

- UNKNOWN
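When monitoring a cluster, it can be handy to summarise workers by state. The sketch below assumes each worker is available as a dictionary with a `state` key holding one of the names above; that record shape, the worker ids, and `count_by_state` are hypothetical.

```python
from collections import Counter
from enum import Enum


class WorkerState(Enum):
    ALIVE = "ALIVE"
    DEAD = "DEAD"
    DECOMMISSIONED = "DECOMMISSIONED"
    UNKNOWN = "UNKNOWN"


def count_by_state(workers):
    """Count workers per state, including states with zero workers."""
    counts = Counter(WorkerState(worker["state"]) for worker in workers)
    return {state: counts.get(state, 0) for state in WorkerState}


# Hypothetical worker records, for illustration only.
workers = [
    {"id": "worker-1", "state": "ALIVE"},
    {"id": "worker-2", "state": "ALIVE"},
    {"id": "worker-3", "state": "DEAD"},
]
summary = count_by_state(workers)
```

Converting the raw string through `WorkerState(...)` makes an unexpected state name fail loudly with a `ValueError` instead of being counted silently.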